The Rise of Local LLMs – what to look out for in 2024

During the holidays, as I took a short break from building AI at Zefort, I found myself reflecting on the whirlwind year that was 2023 in the world of Large Language Models (LLMs) – the underlying technology that enables generative AI solutions, such as ChatGPT.

As we now set our sights on the year ahead, I want to share some thoughts on what I believe will be an important trajectory in 2024, especially for anyone interested in privacy and customized AI solutions.

A year ago, ChatGPT had just been launched and we were busy unpacking its implications. When OpenAI introduced GPT-4 to the public in March 2023, it seemed certain that the AI revolution would manifest in ever larger models, guarded behind APIs and veiled in growing secrecy, leading to a monopolization of AI by a few big industry players.

However, a look back at 2023 reveals a different story. The real revolution did not so much come from ever larger, more cognitively powerful models. Instead, we saw an outpouring of LLMs released openly by various labs by the month, sometimes even more often. The tide had unexpectedly turned towards openness.

When OpenAI launched GPT-3 in 2020, it set a precedent by not releasing the model for download, as had been customary at the time, but instead making it available only to vetted users through an API, in the name of responsibility and safety. Since then, big tech companies have been less willing to make generative models openly available. Meanwhile, critics have argued that being unable to download and study models directly hinders AI safety research.

In February 2023, in the middle of the ChatGPT frenzy, Meta released a model called LLaMA. It was offered for download only to researchers, presumably in an attempt to strike a balance between openness and safety concerns. Nevertheless, within days it leaked onto the public internet, becoming the first catalyst of the open LLM revolution.

At 65 billion parameters, its largest version was considerably smaller than several previously released open LLMs, yet its performance was commendable: it outperformed much larger models, such as the open BLOOM and the closed GPT-3. Its smaller size turned out to be precisely the game changer, as it made the model far more accessible and practical to use, especially the smallest version with 7B parameters. LLaMA was swiftly followed by Alpaca from Stanford University and Vicuna from UC Berkeley, versions fine-tuned to follow instructions and hold dialogue. LLaMA thus became the foundational building block for a wide range of derivative models, sparking a community-led counter-offensive to ChatGPT.

While LLaMA was out in the wild and ‘open’ in the sense that it could now be downloaded and run locally, it was still licensed for research purposes only. In May and June, MosaicML took open LLMs a step further with the MPT models. Like the smaller versions of LLaMA, they came in accessible sizes, 7B and 30B, but they were permitted for commercial use. The Falcon models from the Technology Innovation Institute of the UAE soon followed suit. These models could now be run locally not only by researchers and hobbyists, but also by businesses.

These releases were soon followed by LLaMA-2 in July, another turning point: it demonstrated capabilities in the same league as GPT-3.5, the model behind the original ChatGPT, and this time Meta allowed commercial use. By this point it was becoming clear that choosing to run an LLM locally, for instance for the sake of privacy or customizability, did not have to mean a big trade-off in quality. While GPT-4 remained the apex model, more capable at tasks involving complex reasoning, LLaMA-2 turned out to be sufficient for many practical applications, such as answering questions based on document sources. It also supports a broad set of languages, which currently sets it apart from most other open models.

In September, a new kid on the block, the French start-up MistralAI, released a 7B model that outperformed much larger models, including the 13B version of LLaMA-2 and, on many benchmarks, the 34B version of the original LLaMA. By the end of the year, they surprised again with the release of the Mixtral 8x7B model, with roughly 47B parameters in total and open for commercial use. Despite its moderate size, it was able to beat GPT-3.5 and rival GPT-4 on a range of evaluation benchmarks. Mixtral supports five major European languages, which is broader than most models, but still fewer than LLaMA-2 covers.

Alongside all these model releases, and many more that I have not covered, there have been tremendous community efforts to make LLMs more accessible and practical to tinker with. This push to democratize LLMs includes methods for model compression (such as quantization) and efficient fine-tuning (such as LoRA), enabling inference and adaptation even on consumer-grade hardware. On the data front, community efforts such as OpenAssistant and ShareGPT crowd-source datasets for instruction following, while recent research has shown that highly competitive instruction-following models can also be trained with very small datasets (e.g., Meta’s LIMA) or with synthetic data generated by other LLMs (e.g., Microsoft’s Orca-2).
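To make the point about consumer-grade hardware more concrete, here is some rough arithmetic as a minimal Python sketch. It assumes quantization and LoRA as representative examples of the compression and efficient fine-tuning methods mentioned above, counts only the memory needed to hold the weights (ignoring activations, the KV cache and runtime overhead), and uses a simple symmetric "absmax" quantization scheme. All function names are illustrative and not taken from any particular library.

```python
"""Back-of-envelope arithmetic for running and adapting LLMs on modest hardware.

Illustrative assumptions (not any specific library's behaviour):
- weight memory = parameters x bits per weight / 8, ignoring activations,
  KV cache and per-block quantization metadata;
- quantization is symmetric "absmax" with a single scale per tensor;
- LoRA adds two trainable low-rank factors B (d x r) and A (r x k) next to
  a frozen d x k weight matrix.
"""


def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def absmax_quantize(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits, 7 for 4 bits
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0:  # all-zero input: any scale works, avoid division by zero
        return [0] * len(weights), 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; the error per value is at most scale / 2."""
    return [v * scale for v in q]


def lora_trainable_fraction(d: int, k: int, r: int) -> float:
    """Share of a d x k matrix's parameter count that a rank-r adapter trains."""
    return r * (d + k) / (d * k)


if __name__ == "__main__":
    # Memory for a 7B-parameter model at different weight precisions.
    for bits in (16, 8, 4):
        print(f"7B model, {bits}-bit weights: ~{weight_memory_gb(7e9, bits):.1f} GB")

    # Quantize a handful of toy weights to 8 bits and back.
    original = [0.12, -0.5, 0.33, 0.0]
    q, s = absmax_quantize(original)
    print("quantized:", q)
    print("restored: ", [round(x, 3) for x in dequantize(q, s)])

    # A rank-8 adapter on a 4096 x 4096 projection matrix.
    frac = lora_trainable_fraction(4096, 4096, 8)
    print(f"LoRA rank 8 on 4096x4096 trains {frac:.2%} of that matrix's size")
```

Under these assumptions, a 7B-parameter model's weights shrink from about 14 GB at 16 bits to about 3.5 GB at 4 bits, within reach of many consumer GPUs, while a rank-8 adapter trains well under 1% of the parameters of the matrix it adapts. Practical quantization schemes typically use per-block scales and extra metadata, so treat these memory figures as lower bounds.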

This suggests that neither enormous amounts of data nor vast compute will guarantee the big players in the LLM field continued dominance. As an internal memo by a Google employee put it: “We have no moat, and neither does OpenAI”; open source “has been quietly eating our lunch”, because it iterates much faster than a single organization with a huge monolithic model can. Nor do I think that growing model sizes alone will take us to the next level, as that has seldom been the case in the history of NLP; innovation in model architectures is generally also needed.

So, what does this mean for individuals and businesses at large?

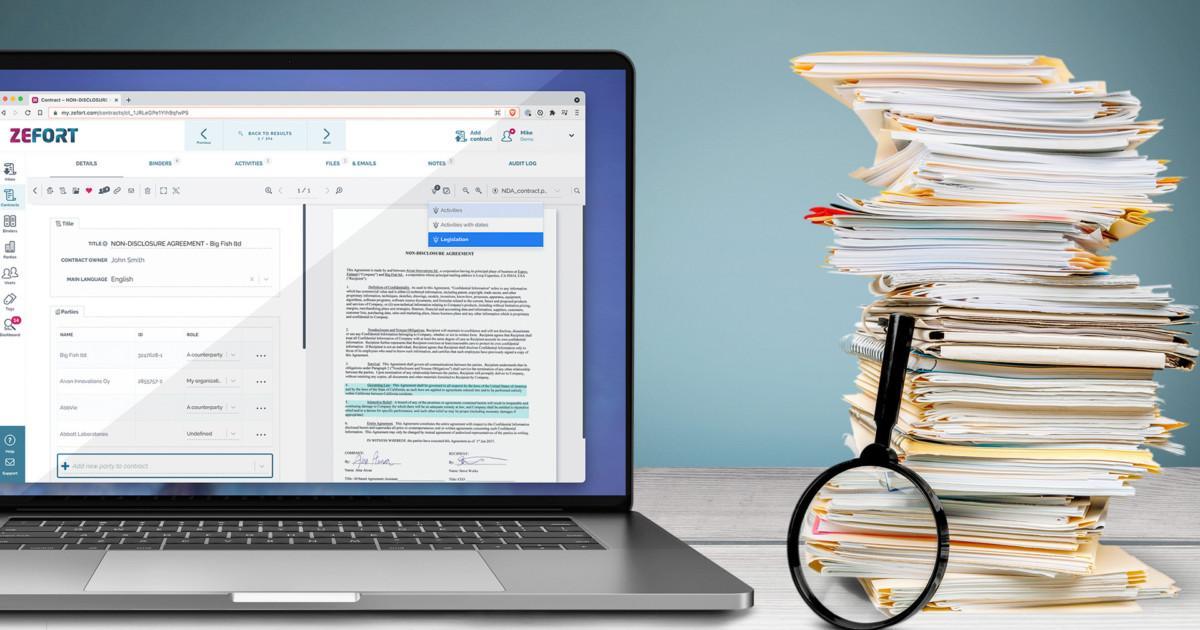

Simply put, accelerating community development and the increasing ease of running capable LLMs locally present exciting opportunities for highly privacy-sensitive and domain-specific applications. They open up use cases that the likes of OpenAI, Google or Anthropic cannot serve with their current business models. At Zefort, we are particularly excited to have these powerful tools at our disposal as we continue to explore document understanding and question answering for contracts, with an emphasis on privacy, verifiability and ease of use, in order to empower users with AI.

Looking at 2024, I, for one, am eagerly waiting to see what the year will bring, be it the release of LLaMA-3, other models, or other kinds of innovation. While OpenAI is doing its best to build an ecosystem and community around custom GPTs and the accompanying GPT store (think apps running on ChatGPT, i.e., hosted by OpenAI and behind a subscription), there is a significant segment it cannot serve. The feverish development in all directions will most likely not diminish, and we can expect to be surprised. My bet is that we will see yet more freedom in building powerful AI locally, which can connect into an emerging Web of AIs. These AIs can each be more specialized and learn to leverage each other for specific purposes. Local LLMs offer hope that the revolution will not be centralized.

Samuel Rönnqvist is the Machine Learning Lead at Zefort, where he heads AI research and development related to document understanding for contracts. He holds a PhD in natural language processing and has been active as a researcher in the field for more than a decade. He began working on deep learning early on and pioneered its use in financial stability monitoring through text mining of news. In 2018, he started working on neural language generation at the University of Turku, Finland, and Goethe University Frankfurt, Germany, collaborating on controlled news generation with the STT News Agency through the Google News Initiative, as well as on a project that produced the first fully machine-generated scientific book to be published, in collaboration with Springer Publishing.