Explainable NLP with attention

AI algorithms solve problems that are intrinsically difficult to explain

The very reason we use AI is to deal with complex problems – problems one cannot adequately solve with traditional computer programs.

Should you trust an AI algorithm, when you cannot even explain how it works?

The “how” part is the problem with this line of thinking. Can you explain how, exactly, your brain decided, with all those neurons and synapses and neurotransmitters and chemicals, to have a cup of tea instead of a coffee?

Of course, you cannot. However, you may be able to explain *why* you chose tea: you’ve already had two coffees today, and you think a nice Earl Grey would agree with you better.

The “how” and the “why”: model-centric versus data-centric explanations

Likewise, explaining how an AI algorithm makes decisions is an unreasonable request, akin to explaining how your brain works. Of course, there are numerous ML algorithms that can be satisfactorily explained to a fellow scientist. It is much harder to explain them to a layman, which is the goal for explainable AI.

The “algorithm” of your brain lies in its detailed structure and in how the synapses work together. It is easier to reason about the history of your beverage choices, the available flavors, and so on: in other words, the data.

Ask a researcher about how their ML model works and you’re in for a complicated math lesson. Alternatively, the researcher could choose to explain why their ML model works by describing the input data, model training and performance statistics. To put it simply, answering the “how” question is about understanding the model. Answering the “why” is often more practical.

Explainability can mean different things to different people

Explainable AI has stirred some controversy among researchers and developers, both as a concept and as a general aim. The discussion is further complicated by the fact that people have different views on what explainability is and what it is not. Some prefer to differentiate between explainability and interpretability, while others use the terms interchangeably.

One example is the debate around the paper “Attention is not explanation” by Jain & Wallace.

…models equipped with attention provide a distribution over attended-to input units, and this is often presented (at least implicitly) as communicating the relative importance of inputs. … Our findings show that standard attention modules do not provide meaningful explanations and should not be treated as though they do.

Sarthak Jain and Byron C. Wallace in “Attention is not explanation” (2019)

A recent paper claims that ‘Attention is not Explanation’ (Jain and Wallace, 2019). We challenge many of the assumptions underlying this work, arguing that such a claim depends on one’s definition of explanation.

Sarah Wiegreffe and Yuval Pinter in “Attention is not not explanation” (2019)

The disagreement stems, I think, from the fact that explainability is not really well defined. For some people it means explaining the algorithm; for others it’s more about the data.

For what it’s worth, I’m firmly in the camp that in many forms of NLP, attention is a usable form of explanation. Attention shows which words the model concentrates on. We humans are pretty good at looking at this kind of data and drawing intuitive conclusions from it.

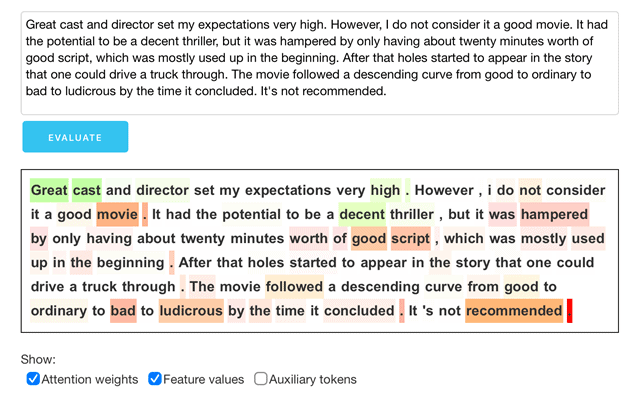

You can try it yourself with this demo: https://ulmfit.purecode.pl
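To make “which words the model concentrates on” a little more concrete, here is a minimal sketch of how attention weights over tokens can be computed and ranked. It is a toy illustration of scaled dot-product attention with made-up vectors, not the model behind the demo above or anything Zefort runs; the names `attention_weights` and `top_tokens`, the example sentence, and all the numbers are assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention of one query vector over token keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def top_tokens(tokens, weights, n=3):
    """Return the n tokens with the highest attention weight."""
    ranked = sorted(zip(tokens, weights), key=lambda tw: tw[1], reverse=True)
    return ranked[:n]


# Hypothetical tokens and 2-dimensional key vectors (invented numbers;
# in a real model these would be learned representations).
tokens = ["this", "agreement", "is", "governed", "by", "finnish", "law"]
keys = [
    [0.1, 0.0],
    [0.9, 0.3],
    [0.0, 0.1],
    [1.2, 0.8],
    [0.1, 0.1],
    [0.8, 1.5],
    [1.0, 1.4],
]
query = [1.0, 1.0]  # hypothetical query vector, e.g. for a "governing law" topic

weights = attention_weights(query, keys)
for token, weight in top_tokens(tokens, weights):
    print(f"{token:10s} {weight:.2f}")
```

The weights sum to one, so they can be drawn as a heat map over the text, which is roughly what attention visualizations do. Whether the highlighted words count as an explanation is exactly the point of the debate above.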

Explainability in Zefort

At Zefort, we’re building systems to analyze large bodies of legal documents, among other things. The Zefort SaaS product comes with various built-in modules that run NLP models on legal texts. One of these is the “insights” module, which can be used to quickly find the parts of a large body of documentation that discuss a particular topic. Such topics include governing law, transferability clauses, parts relevant to GDPR compliance, and so on.

We’re also working on a module where users can define their own topics relevant to their own business. Users give a few examples of the things they’d like to find, and Zefort trains an ML model to find more. It is especially in this context that we’ve found attention can really help the user see whether the algorithm is basing its decisions on words that are actually relevant. If it’s not, you probably need to give it more examples so it can learn a better model.

If you’re working with NLP-based models, I’d encourage you to see if attention would be a useful explainability mechanism in your product.

This article was originally published on the AIGA blog on 10 February 2022.